The interplay between energy and artificial intelligence (AI) is a paradox of progress: AI devours power to fuel innovation, yet it holds the key to solving our energy woes. As we push AI to new heights, we’re hitting stark limits: hardware shortages, overstretched power grids, and an insatiable demand that efficiency alone can’t tame. Microsoft’s Satya Nadella, reflecting on DeepSeek’s release, invoked Jevons Paradox with a warning: cheaper AI doesn’t shrink consumption; it explodes it. Enter Large Quantitative Models (LQMs), a new breed of AI that could bridge this gap by not just consuming energy but helping to optimize it.

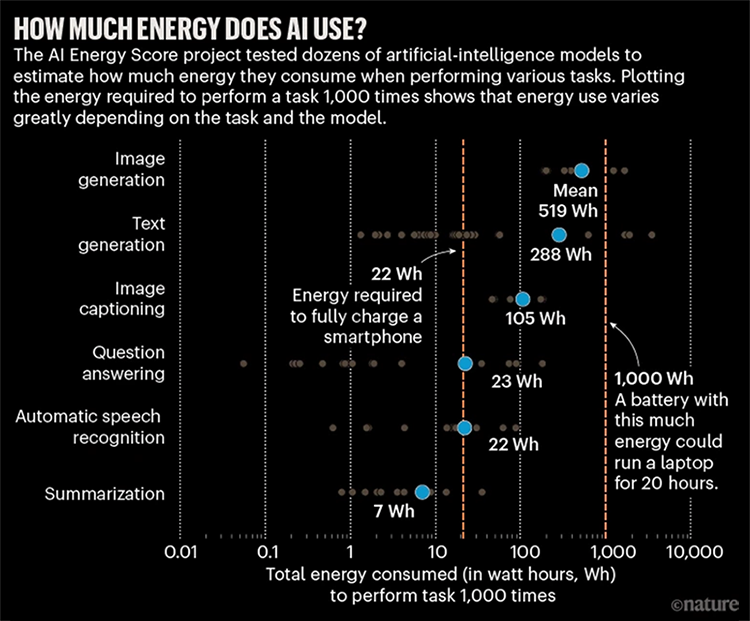

Picture this: every AI-generated image, whether a meme, a digital masterpiece, or a synthetic portrait, comes with an energy price tag. Generating one image uses about 0.011 kilowatt-hours (kWh), roughly what it takes to charge your phone. Now imagine the scale: if we conservatively assume the world churns out 1 million images per minute, that’s 11 megawatt-hours (MWh) per minute, or 0.66 gigawatt-hours (GWh) per hour. Over a day, that adds up to 15.84 GWh, enough juice to light up a small city, and that’s just from generating images. Add in text, video, and simulations, and the tally soars.
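The arithmetic above can be checked in a few lines of Python. The inputs are the assumptions stated in the text: about 0.011 kWh per image and a hypothetical global rate of 1 million images per minute. The function name `image_energy` is purely illustrative.

```python
# Back-of-envelope check of the image-generation energy figures.
# Assumptions (from the text): ~0.011 kWh per image and a hypothetical
# global rate of 1 million images per minute.

KWH_PER_IMAGE = 0.011          # energy per generated image, kWh
IMAGES_PER_MINUTE = 1_000_000  # assumed global generation rate


def image_energy(kwh_per_image: float, images_per_minute: float) -> dict[str, float]:
    """Return energy use per minute (MWh), per hour (GWh), and per day (GWh)."""
    kwh_per_minute = kwh_per_image * images_per_minute
    return {
        "mwh_per_minute": kwh_per_minute / 1_000,             # 1 MWh = 1,000 kWh
        "gwh_per_hour": kwh_per_minute * 60 / 1_000_000,      # 1 GWh = 1,000,000 kWh
        "gwh_per_day": kwh_per_minute * 60 * 24 / 1_000_000,
    }


if __name__ == "__main__":
    totals = image_energy(KWH_PER_IMAGE, IMAGES_PER_MINUTE)
    print(f"{totals['mwh_per_minute']:.2f} MWh per minute")  # 11.00
    print(f"{totals['gwh_per_hour']:.2f} GWh per hour")      # 0.66
    print(f"{totals['gwh_per_day']:.2f} GWh per day")        # 15.84
```

Because every figure scales linearly with both inputs, you can change either assumption and rerun the check. For example, doubling the images-per-minute rate doubles each total.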

Powering this AI frenzy are graphics processing units (GPUs), the unsung heroes of modern compute. In 2023 alone, NVIDIA shipped roughly 3.76 million GPUs to data centers. At peak, each H100 chip gulps 700 watts, so a fleet of 5 million such GPUs running flat-out would demand 3.5 gigawatts (GW), the output of a hefty nuclear plant. The problem is that we can’t keep up: demand for AI outstrips GPU supply, and NVIDIA’s production lags behind the hype. Even if we doubled the fleet overnight, we’d hit another wall: energy availability.
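Here is a quick sketch of the fleet-power arithmetic. It assumes every GPU draws the H100’s ~700 W peak around the clock. That is an upper bound: real fleets rarely run flat-out, and the sketch ignores other hardware and cooling overhead. The helper names are hypothetical.

```python
# Peak power draw of a GPU fleet, using the ~700 W per-H100 peak from the text.
# Assumption: every GPU runs at peak 24/7 (an upper bound, not a measurement).

H100_PEAK_WATTS = 700
HOURS_PER_YEAR = 24 * 365


def fleet_peak_gw(num_gpus: int, watts_per_gpu: float = H100_PEAK_WATTS) -> float:
    """Peak fleet power in gigawatts (1 GW = 1e9 W)."""
    return num_gpus * watts_per_gpu / 1e9


def fleet_annual_twh(num_gpus: int, watts_per_gpu: float = H100_PEAK_WATTS) -> float:
    """Energy per year in TWh if the fleet ran flat-out all year (1 TWh = 1,000 GWh)."""
    return fleet_peak_gw(num_gpus, watts_per_gpu) * HOURS_PER_YEAR / 1_000


for fleet in (3_760_000, 5_000_000):  # 2023 shipments vs. the 5-million example
    print(f"{fleet:>9,} GPUs: {fleet_peak_gw(fleet):.2f} GW peak, "
          f"{fleet_annual_twh(fleet):.1f} TWh/yr at 100% utilization")
```

At 700 W each, the 3.76 million GPUs shipped in 2023 come to roughly 2.6 GW, and a 5-million-GPU fleet comes to 3.5 GW. Run flat-out for a full year, the larger fleet would use about 31 TWh.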

Data centers, where most AI workloads run, operate under power contracts that cap the maximum electricity they can draw, so that utilities can supply power reliably and keep the grid stable. Yet data center power needs are expected to triple by 2030, to more than 80 GW of demand. That sounds like a big number, until you look at AI’s trajectory. According to a recent U.S. Department of Energy report, massive deployments of accelerated AI servers helped nearly triple data center electricity use, from 60 terawatt-hours (TWh) in 2016 to 176 TWh in 2023. That figure is projected to double or triple again by 2028. In states like Virginia, where tax breaks have drawn a concentration of data centers, the electricity infrastructure is already showing strain. Some operators are trying to source their own power. However, reports indicate that meeting this demand would require more than doubling the annual number of new solar installations and going beyond what existing offshore wind sites can deliver.
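The growth rates implied by these figures can be sketched as follows. The inputs are the numbers quoted above. Treating them as smooth compound annual growth is a simplifying assumption. The GW-to-TWh conversion also assumes a constant, round-the-clock load. The helper names are hypothetical.

```python
# Growth arithmetic for the data-center figures quoted in the text.
# Assumption: growth is expressed as a smooth compound annual rate
# (real year-to-year growth is lumpier).

HOURS_PER_YEAR = 24 * 365


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


def gw_to_twh_per_year(gw: float) -> float:
    """Energy (TWh) used by a load drawing `gw` gigawatts continuously for a year."""
    return gw * HOURS_PER_YEAR / 1_000


twh_2016, twh_2023 = 60.0, 176.0
print(f"2016 to 2023: x{twh_2023 / twh_2016:.2f}, "
      f"{cagr(twh_2016, twh_2023, 7):.1%} per year")

for multiple in (2, 3):  # "double or triple by 2028"
    twh_2028 = twh_2023 * multiple
    print(f"x{multiple} by 2028: {twh_2028:.0f} TWh, "
          f"{cagr(twh_2023, twh_2028, 5):.1%} per year")

# GW measures power (a rate); TWh measures energy. Converting needs a duration.
print(f"80 GW continuous: {gw_to_twh_per_year(80):.0f} TWh per year")
```

Nearly tripling between 2016 and 2023 works out to roughly 17% growth per year. Doubling or tripling again by 2028 implies roughly 15% to 25% per year. The last line is a reminder that GW and TWh measure different things: 80 GW drawn continuously corresponds to about 700 TWh a year.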

This energy bottleneck is why tech titans are buying, building, or contracting their own power plants. Microsoft is backing the restart of a reactor at Three Mile Island, Amazon is pouring billions into small modular reactors (SMRs), and Google is eyeing similar moves. Nuclear’s allure? It’s reliable, scalable, carbon-light, and perfect for AI’s 24/7 appetite. These hyperscalers aren’t just chasing AI market share; they’re securing a lifeline for their data centers. It’s a clear signal: technology’s growth hinges on energy, and we’re racing to plug the gap.

Then came DeepSeek’s R1 model in January 2025. Satya Nadella took to X with a reality check: “Jevons Paradox strikes again! As AI gets more efficient and accessible, we will see its use skyrocket, turning it into a commodity we just can’t get enough of.” He’s channeling the nineteenth-century English neoclassical economist William Stanley Jevons, who observed that more efficient steam engines increased coal consumption rather than reducing it. DeepSeek’s breakthrough won’t cut AI’s energy bill; it’ll balloon it. Lower costs mean more users, more apps, and more power draw. Efficiency fuels excess and demand.
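Nadella’s point can be made concrete with a toy rebound-effect model. This is a hypothetical sketch, not anything from DeepSeek or Microsoft. It assumes that the price of an AI query scales with the energy the query uses, and that demand follows a constant price elasticity. Under those assumptions, cutting energy per query by a factor k multiplies total energy use by k ** (elasticity - 1). Efficiency therefore raises total consumption whenever demand is elastic (elasticity above 1).

```python
# Toy illustration of the Jevons Paradox (rebound effect) for AI workloads.
# Hypothetical assumptions, not taken from the article:
#   * the price of a query is proportional to the energy it uses, and
#   * demand follows a constant-elasticity curve: queries ~ price ** -elasticity.


def total_energy(energy_per_query: float, elasticity: float,
                 scale: float = 1.0, price_per_unit_energy: float = 1.0) -> float:
    """Total energy = (number of queries demanded) x (energy per query)."""
    price = price_per_unit_energy * energy_per_query
    queries = scale * price ** -elasticity
    return queries * energy_per_query


def energy_change(efficiency_gain: float, elasticity: float) -> float:
    """Ratio of total energy after vs. before cutting energy per query by `efficiency_gain`x."""
    before = total_energy(1.0, elasticity)
    after = total_energy(1.0 / efficiency_gain, elasticity)
    return after / before  # analytically: efficiency_gain ** (elasticity - 1)


for elasticity in (0.5, 1.0, 1.5):
    ratio = energy_change(efficiency_gain=10, elasticity=elasticity)
    print(f"elasticity {elasticity}: total energy x{ratio:.2f} after a 10x efficiency gain")
```

With an elasticity of 1.5, a 10x efficiency gain roughly triples total energy use (about 3.16x). With an elasticity of 0.5, it cuts consumption to about a third. These elasticity values are illustrative only; how price-sensitive AI demand really is remains an empirical question.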

So how do we break this cycle? This is where Large Quantitative Models (LQMs) come in. They are quantitative AI systems that create and innovate based on the principles of physics, chemistry, and math, in addition to language. Unlike generative models that churn out content, LQMs excel at crunching vast datasets with statistical precision, predicting outcomes, and streamlining systems.

LQMs can help solve the physics and materials science bottlenecks limiting today’s energy supply. Right now, LQMs are impacting the battery sector in three ways. They help manufacturers identify and optimize new battery chemistries, materials, and designs. They cut the time needed to predict a cell’s end of life from years to months or weeks. And they improve shelf-life prediction. In this way, the relationship becomes truly symbiotic: energy powers AI, and AI, via LQMs, can potentially re-engineer energy production, distribution, and use.

We’re at a tipping point. AI’s appetite is outpacing our ability to fuel it. Hyperscalers going nuclear is bold proof that energy is tech’s lifeline, but Jevons Paradox reminds us that efficiency gains alone tend to increase demand rather than curb it. LQMs could offer a way out by helping design a future where energy is abundant, available on demand, and sustainable. The more our customers and partners invest in LQMs for energy-related materials science projects, the closer we come to realizing this vision and removing the energy shackles that restrict AI’s true potential.

Talk to us today to explore how LQMs can power your materials science and energy initiatives.